The statistical is not the practical

A guide to gauging the real world significance of computational methods

When I first entered the world of computational chemistry in what seems like the Middle Ages of the field, the way we compared methods carried the whiff of ritual. One group would present a new algorithm, slice a dataset into training and test sets, and show a modest but statistically “significant” improvement over the baseline. Another group would then counter with a different split or a tweak in hyperparameters that restored the balance. Back and forth the pendulum swung, each camp armed with p-values as if they were muskets in an endless duel. The duel continues, often obscured by the smoke of breathless press releases and company valuations.

This practice has now become so ingrained that few pause to ask: what do these numbers really mean in the messy world of drug discovery? Will a two-hundredths reduction in mean absolute error make a medicinal chemist’s decision easier? Will a correlation coefficient that is “significantly” higher by the third decimal place move a project forward? In most cases the answer is no, although you might have a hard time finding it in the thicket of publications coming out every day.

That is why a recent paper in the Journal of Chemical Information and Modeling from Pat Walters and his colleagues is so useful and...practical. Its authors argue that what matters in comparing machine learning methods for small-molecule property prediction is not statistical significance but practical significance. It is a deceptively simple point, but one that carries profound implications for how we judge progress in the field. The point is well-understood in medicine; for instance, a cancer drug that shows a slight statistical improvement in patient outcomes may translate to a one month increase in lifespan, rendering it practically marginally useful at best. But in machine learning and AI it’s not so easy to discern the differences.

Consider the classic ritual of the single train/test split. One dataset, one cut, one model evaluation. It is, as the paper notes, the computational equivalent of running a wet lab experiment with a single replicate. Any bench scientist would frown at this practice because such a comparison is fragile: change the split, and the “winning” method may suddenly lose. Yet the computational chemistry community has for too long tolerated this fragile practice.

The authors recommend what should become standard practice: 5 × 5 repeated cross-validation. Run your model not once, but 25 times, each on a different split. The result is not a single performance number but a distribution of outcomes. This performance distribution can then be compared across methods, much as experimentalists compare replicates in the lab. The virtue of this approach is that it respects the inherent stochasticity of both data and models. It also immunizes us against the seductive but misleading certainty of a single number.

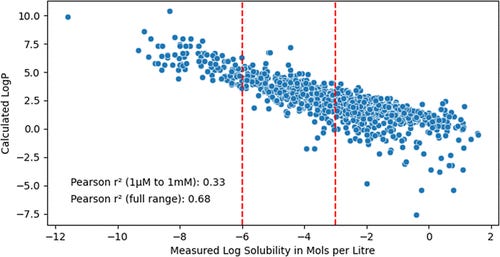

Even with replicates in hand, the temptation remains to hunt for p < 0.05. But again, the question is one of practical and not just statistical significance. In drug discovery, this translates to asking whether the difference matters to chemists and pharmacologists. If your model improves solubility prediction by 0.03 log units, is that a scientific breakthrough? Or is it a data point in the service of noise? The answer lies in framing results in units that practitioners care about: mean absolute error in log concentration, fold differences in potency, or the percentage of predictions within a two-fold error range. These carry the added benefit of being comprehensible to medicinal chemists, biologists and pharmacologists, people who comprise the natural audience of computational chemists and are the ones who are usually skeptical of the field. This shift from abstract significance to practical utility is subtle but transformative. It turns the conversation from an internal duel among modelers to a dialogue with the bench scientists who must ultimately use the predictions.

Metrics themselves are not innocent, though. A dataset with a wide dynamic range will make almost any model look good, while one with severe class imbalance can make accuracy meaningless. Good metrics can be reported for datasets with dynamic ranges that are not reflective of real world drug discovery. Reporting correlation coefficients without accounting for these effects is like reporting a telescope’s resolution without noting whether you are observing Saturn or a streetlight. The authors here call for honesty: choose metrics that reflect the actual challenges of drug discovery datasets. Only then can we tell whether an improvement is substantive or illusory and of academic interest.

History provides an illuminating parallel for the kinds of problems and solution proposed in this paper. For centuries, astronomers derived sweeping conclusions about the heavens from a handful of observations. Based on these observations you could make almost any model fit, whether heliocentric or geocentric or otherwise. It was only when they embraced repeated measurement - Tycho Brahe’s meticulous logs, for instance - that astronomy became a predictive science. Machine learning in chemistry is at a similar juncture. The chemists of the 18th century built credibility not by producing spectacular one-off reactions, but by publishing detailed recipes, impurities and all, so others could reproduce their work. Machine learning in drug discovery must aspire to the same ethos.

In the end, models are only useful if they help discover medicines. That is the practical significance that matters most — and it is one no p-value can capture.