On the failure of rescoring in virtual screening

Why automated virtual screening and docking remain hard and why expertise remains essential

This is a valuable study that should temper the enthusiasm of modelers applying docking-based virtual screening to protein targets, while sparking some optimism about the utility of expert knowledge. In the last few years, the success of ultra-large virtual library screens in increasing hit rates has highlighted the potential of this approach. But with more potential virtual hits also comes the problem of discriminating the right ones from the wrong ones.

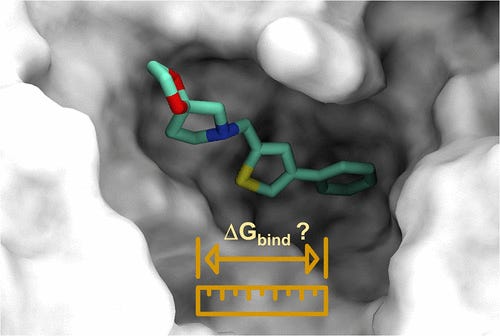

There are usually two problems inherent in assessing the feasibility of virtual screening hits, both hinging on telling true positives from false ones. The first problem concerns the elimination of bad poses that display strained conformations, unsatisfied hydrogen bonds, polar groups in apolar pockets and so on. But even when these poses are removed using appropriate filters and visual inspection, there's still the second problem of distinguishing true from false positives using the right score.
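To make the first problem concrete, here is a minimal sketch of what an automated pose filter might look like. Everything in it is an assumption for illustration: the `Pose` fields, the idea that strain and unsatisfied hydrogen bonds have already been computed by some upstream tool, and especially the cutoff values, which are hypothetical and not taken from the study.

```python
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical per-pose descriptors, assumed to be computed upstream."""
    ligand_id: str
    strain_kcal_mol: float         # ligand strain relative to a relaxed conformer
    unsatisfied_hbond_donors: int  # buried donors with no acceptor partner
    polar_in_apolar: int           # polar groups sitting in apolar sub-pockets


# Illustrative cutoffs only; real thresholds are target- and method-dependent.
MAX_STRAIN_KCAL_MOL = 8.0
MAX_UNSATISFIED_DONORS = 1
MAX_POLAR_IN_APOLAR = 0


def passes_filters(pose: Pose) -> bool:
    """Return True if the pose shows none of the red flags listed above."""
    return (
        pose.strain_kcal_mol <= MAX_STRAIN_KCAL_MOL
        and pose.unsatisfied_hbond_donors <= MAX_UNSATISFIED_DONORS
        and pose.polar_in_apolar <= MAX_POLAR_IN_APOLAR
    )


def filter_poses(poses: list[Pose]) -> list[Pose]:
    """Keep only the poses that pass every filter, preserving input order."""
    return [p for p in poses if passes_filters(p)]


if __name__ == "__main__":
    # Made-up example poses, not real data.
    poses = [
        Pose("lig1", 3.2, 0, 0),
        Pose("lig2", 12.5, 0, 0),  # too strained
        Pose("lig3", 4.0, 2, 0),   # too many unsatisfied donors
    ]
    print([p.ligand_id for p in filter_poses(poses)])
```

A filter like this only removes obviously bad poses. It says nothing about whether the survivors are true binders, which is exactly the second problem.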

For a long time, scoring functions have been widely known to be inaccurate (although they sometimes work in the context of a specific series), so practitioners use a variety of rescoring techniques that try to take into account high energies, solvation, conformational focusing (the fishing out of a protein-bound conformation from multiple solution conformations), entropy, etc. The hope is that this kind of rescoring, which considers much more of the physics, might be better than any raw scoring.

Unfortunately, as the present study indicates, this is easier said than done. The authors use a variety of techniques, including deep learning, quantum mechanical optimization and force fields, to try to rescore hits from a variety of known ultra-large virtual screens. Careful statistics are applied to discriminate the true binders from the false ones. But as they conclude,

"True positive and false positive ligands remain hard to discriminate, whatever the complexity of the chosen scoring function. Neither a semiempirical quantum mechanics potential nor force-fields with implicit solvation models performed significantly better than empirical machine-learning scoring functions. Disappointingly, refining poses by molecular mechanics with implicit solvent only helps marginally. Reasons for scoring failures (erroneous pose, high ligand strain, unfavorable desolvation, missing explicit water, activity cliffs) have been known for a while and are reported again here, but cannot yet be globally addressed by a single rescoring method."

That's tough luck, because it means that automating any kind of scoring and rescoring process to yield reliable results is going to be hard. So is consensus scoring, where you hope that a variety of different rescoring functions will agree on the same results. This of course does not mean that virtual screening is useless, since there have been several successful campaigns that have not just improved both scaffold diversity and hit rates but have also shown how bigger is often better.
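For readers who haven't seen consensus scoring written down, here is a minimal sketch of one common flavor, rank averaging. It is not the protocol used in the study. The function names, the assumption that lower scores are better for every scoring function, and the numbers themselves are all made up for illustration.

```python
def ranks(scores: dict[str, float]) -> dict[str, int]:
    """Rank ligands by score, 1 = best. Assumes lower (more negative) is better."""
    ordered = sorted(scores, key=lambda lig: scores[lig])
    return {lig: position + 1 for position, lig in enumerate(ordered)}


def consensus_rank(score_tables: list[dict[str, float]]) -> list[tuple[str, float]]:
    """Average each ligand's rank across scoring functions.

    Assumes every table scores the same set of ligands. Returns
    (ligand, mean rank) pairs sorted from best to worst consensus rank.
    """
    per_function = [ranks(table) for table in score_tables]
    ligands = score_tables[0].keys()
    mean_rank = {
        lig: sum(r[lig] for r in per_function) / len(per_function)
        for lig in ligands
    }
    # Sort by mean rank, breaking ties alphabetically for a stable result.
    return sorted(mean_rank.items(), key=lambda item: (item[1], item[0]))


if __name__ == "__main__":
    # Made-up scores from three hypothetical rescoring methods.
    docking = {"A": -9.1, "B": -8.4, "C": -7.0}
    implicit_solvent = {"A": -30.0, "B": -42.0, "C": -25.0}
    ml_score = {"A": -6.5, "B": -7.2, "C": -7.0}
    for ligand, rank in consensus_rank([docking, implicit_solvent, ml_score]):
        print(ligand, round(rank, 2))
```

Averaging ranks rather than raw scores sidesteps the fact that different functions report on different scales. But agreement between functions only tells you they agree, not that the ligand binds, which is the point the study drives home.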

I think the biggest take-home message from this study, as is apparent from several others, is that there is still no real substitute for an experienced computational chemist with good chemical intuition and expert knowledge, and even that isn't going to work all the time. But that's generally true of drug discovery as a whole. Sophistication of technique does not equate to better odds of success. No AI or physics-based protocol is going to replace the real experts any time soon. That might provide some solace, but it also means we have our work cut out for us if we are to realize the full utility of computational techniques across targets.