As I mentioned in a previous post, AI tools like LLMs have taken workflow creation and automation for computational chemistry to a new level for scientists who are not coding experts. Even for coding experts they can compress weeks or even months of work into hours. Both sets of scientists can then focus on the most important task at hand, which is creating molecules that are more likely to become drugs. As a computational medicinal chemist, I really want to spend my time on what’s the best bioisostere for a fragment in my compound, or how I might lock in the conformation of a particular part for improved binding affinity, not on how I am going to handle non-ASCII characters in my code in Python 3 vs. Python 2.

I think this is a big deal, especially for small startups with tight timelines and urgent problems to solve, or even for bigger companies which have had to employ teams of workflow automation specialists. Over the past few months I have used LLMs to create dozens of individual scripts as well as chunk-and-chain pipelines based on RDKit, AutoDock, OpenMM and other tools. The process has been deeply satisfying, although certainly not perfect and not free of headaches. Often the code works on 80% of inputs, and the other 20% can be a nightmare and lead you down a rabbit hole. But most of the time, with enough patience and iterations, you end up with a robust set of scripts that you can use more or less reliably for standard comp chem protocols: molecule creation, sanitization and conversion, conformer generation, MD, docking etc. Below is a short account of what LLMs like Claude and ChatGPT can accomplish, along with the common pitfalls I encountered and how they were solved. It’s worth emphasizing again that specialists in either the open-source tools themselves or software engineering would adopt different approaches or require fewer iterations, but many of us don’t belong to these categories.

Common Problems Solved:

File Format Nightmares: Anyone who’s worked with AutoDock Vina knows the pain of PDBQT format issues. The LLMs built automated converters that handle duplicate atoms, fix residue naming, and properly detect binding sites without manual intervention.
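To give a flavor of what "handling duplicate atoms" can look like, here is a minimal sketch rather than the actual converter. It assumes standard fixed-width PDB columns and assumes that "duplicate" means two ATOM/HETATM records sharing the same atom name, residue name, chain and residue number (for example alternate locations); it simply keeps the first one. The function name `drop_duplicate_atoms` is hypothetical.

```python
def drop_duplicate_atoms(pdb_lines):
    """Keep only the first ATOM/HETATM record for each atom identity.

    Assumption: an atom is identified by atom name, residue name, chain ID
    and residue number + insertion code (fixed-width PDB columns). The
    alternate-location column is deliberately ignored, so alternate
    conformations of the same atom collapse to the first one seen.
    """
    seen = set()
    kept = []
    for line in pdb_lines:
        if line.startswith(("ATOM", "HETATM")):
            key = (
                line[12:16],  # atom name
                line[17:20],  # residue name
                line[21:22],  # chain ID
                line[22:27],  # residue number + insertion code
            )
            if key in seen:
                continue  # duplicate record: skip it
            seen.add(key)
        kept.append(line)  # non-atom records (TER, END, ...) pass through
    return kept
```

Whether dropping or renaming duplicates is the right choice depends on the structure; for docking preparation, keeping a single location per atom is one common simplification.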

Batch Processing Failures: Running MD simulations on dozens of protein-ligand complexes inevitably leads to crashes. The LLMs implemented resilient batch processing with GPU hang recovery, automatic CPU fallback, and checkpoint restart capability. The system now processes 50+ structures overnight without babysitting.

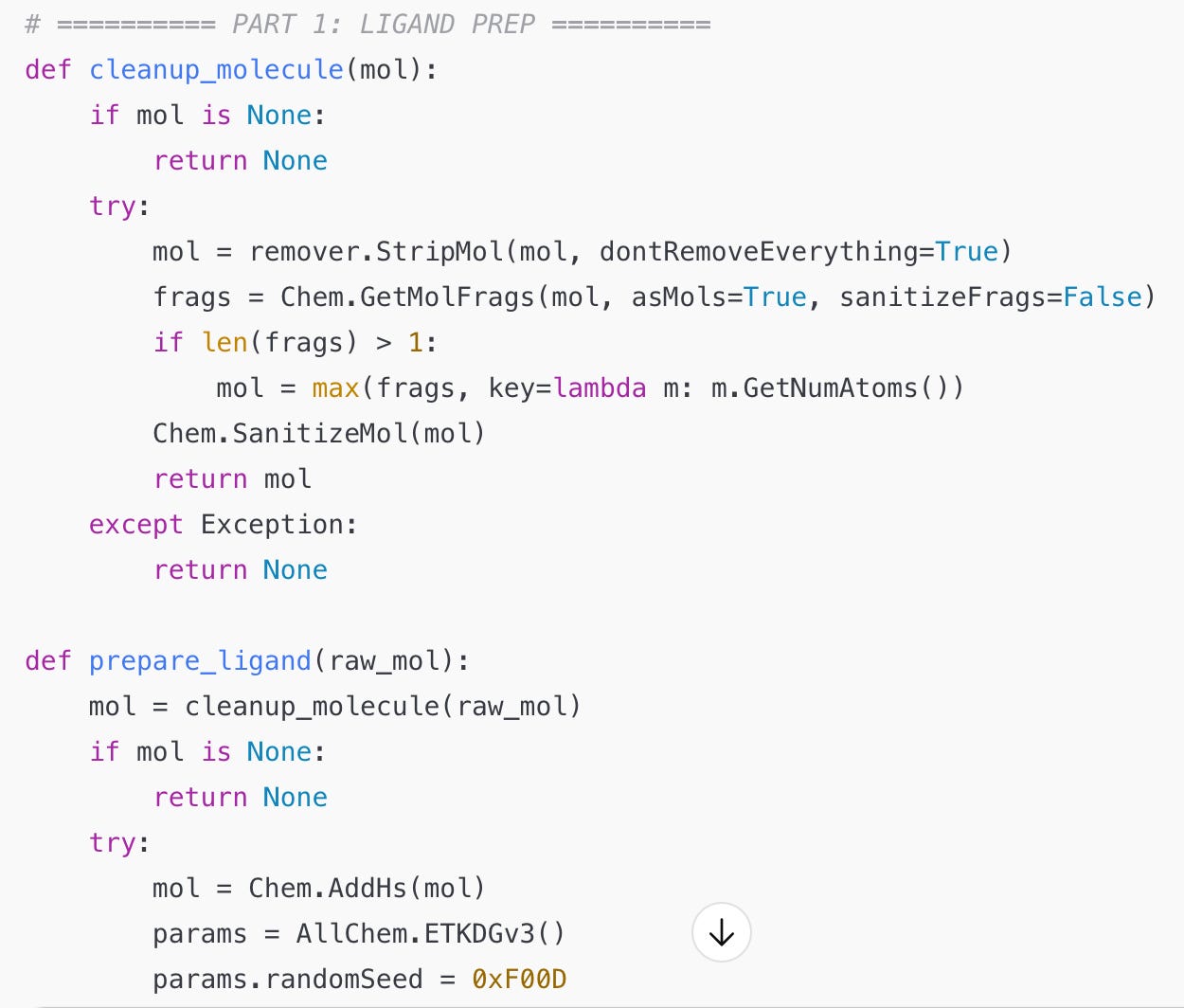
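The fallback-plus-checkpoint idea behind the batch processing can be sketched in a few lines of plain Python. This is an illustration under assumptions, not the code the LLMs produced: `run_batch`, `run_primary` (think GPU) and `run_fallback` (think CPU) are hypothetical names, the checkpoint is a simple JSON file, and item IDs are assumed to be strings. It only catches exceptions; recovering from a true GPU hang would additionally need a timeout or a separate worker process.

```python
import json
from pathlib import Path

SUCCESS = ("primary", "fallback")


def run_batch(items, run_primary, run_fallback, checkpoint_path):
    """Run each item, fall back on failure, and record progress on disk.

    items: iterable of string IDs (they become JSON keys).
    run_primary / run_fallback: callables taking one item (hypothetical).
    Items already finished according to the checkpoint are skipped, so a
    crashed overnight run can be restarted; failed items are retried.
    """
    ckpt = Path(checkpoint_path)
    status = json.loads(ckpt.read_text()) if ckpt.exists() else {}
    for item in items:
        if status.get(item) in SUCCESS:
            continue  # finished in an earlier run
        try:
            run_primary(item)
            status[item] = "primary"
        except Exception as primary_error:
            try:
                run_fallback(item)
                status[item] = "fallback"
            except Exception as fallback_error:
                # Record the failure and move on to the next structure.
                status[item] = f"failed: {primary_error!r}; {fallback_error!r}"
        # Write after every item so a crash loses at most one result.
        ckpt.write_text(json.dumps(status, indent=2))
    return status
```

Writing the checkpoint after every item is slower than writing once at the end, but for MD jobs that take minutes to hours each the cost is negligible next to losing a night of work.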

Integration Complexity: Connecting RDKit conformer generation → OpenMM/OpenFF energy screening → AutoDock Vina docking required solving dependency conflicts between OpenBabel, Meeko, and modern Python environments. We also hit classic issues like Python 2 Unicode encoding failures when processing compound names with non-ASCII characters - exactly the kind of low-level debugging that pulls you away from actual chemistry and can be a time sink. The final pipelines handle everything from SMILES to ranked poses.
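As an illustration of the non-ASCII problem, here is one way to turn compound names into ASCII-safe labels in Python 3 (for file names or PDBQT remarks, say). It is a sketch under assumptions: the function name `ascii_safe_name` and the choice of allowed characters are mine, and the original name should still be stored somewhere as UTF-8 since this conversion is lossy.

```python
import unicodedata


def ascii_safe_name(name, placeholder="_"):
    """Return an ASCII-only label derived from a compound name.

    Accented letters are reduced to their base letter (e.g. an accented
    'e' becomes 'e'); anything else outside ASCII letters, digits and
    '-', '_', '.' is replaced by `placeholder`.
    """
    decomposed = unicodedata.normalize("NFKD", name)
    out = []
    for ch in decomposed:
        if unicodedata.combining(ch):
            continue  # drop the accent mark left over from decomposition
        if ch.isascii() and (ch.isalnum() or ch in "-_."):
            out.append(ch)
        else:
            out.append(placeholder)
    return "".join(out)
```

Greek letters, which are common in compound names, have no ASCII decomposition and end up as the placeholder, so two different names can map to the same label; appending a compound ID is a simple guard against collisions.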

Validation Data Access: The tools used the ChEMBL REST API to automate dataset downloads with IC50 filtering, creating proper validation sets. No more manual CSV exports - the system pulls EGFR inhibitors with IC50 values between 0.1 and 100 nM for meaningful benchmarking.
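A rough sketch of the two pieces involved: building the query and double-checking the records on the client side. The endpoint and the Django-style `__gte`/`__lte` filters reflect my understanding of the ChEMBL web services and should be checked against the current documentation; the function names are hypothetical, and the actual download (plus paging through results) is left out.

```python
from urllib.parse import urlencode

# Assumed endpoint of the ChEMBL activity resource (verify against the docs).
CHEMBL_ACTIVITY_URL = "https://www.ebi.ac.uk/chembl/api/data/activity.json"


def build_activity_query(target_chembl_id, lo_nm=0.1, hi_nm=100.0, limit=1000):
    """Build a URL asking for IC50 activities in [lo_nm, hi_nm] nM."""
    params = {
        "target_chembl_id": target_chembl_id,
        "standard_type": "IC50",
        "standard_units": "nM",
        "standard_value__gte": lo_nm,
        "standard_value__lte": hi_nm,
        "limit": limit,
    }
    return f"{CHEMBL_ACTIVITY_URL}?{urlencode(params)}"


def filter_ic50(records, lo_nm=0.1, hi_nm=100.0):
    """Keep records that really are IC50 values in nM within the range.

    `records` is a list of dicts shaped like ChEMBL activity entries;
    entries with missing or non-numeric values are dropped.
    """
    kept = []
    for rec in records:
        if rec.get("standard_type") != "IC50" or rec.get("standard_units") != "nM":
            continue
        try:
            value = float(rec.get("standard_value"))
        except (TypeError, ValueError):
            continue  # missing or unparseable value
        if lo_nm <= value <= hi_nm:
            kept.append(rec)
    return kept
```

For EGFR one would pass the target's ChEMBL ID (CHEMBL203, to my knowledge) to `build_activity_query`. Filtering again on the client side is redundant if the server-side filters work as expected, but it is a cheap guard against silently benchmarking on the wrong units.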

What Actually Works in Practice:

Hybrid Force Field Approaches: We combined RDKit’s conformer generation with OpenFF Sage for small molecule energetics - RDKit for efficient coordinate manipulation, OpenFF for energy evaluation. This avoided the limitations of relying on RDKit’s MMFF94 force field alone while still leveraging RDKit’s mature molecular manipulation tools.

Error Recovery Architecture: The most valuable addition wasn’t better algorithms - it was comprehensive error handling. Corrupted PDB files and dependency version conflicts happen constantly. Building workflows that gracefully degrade and continue processing saved weeks of manual intervention. Building diagnostics so that protocols don’t fail silently was also an important part of the iterative process.
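One small example of the "don’t fail silently" idea is a pre-flight check that reports why a PDB file looks corrupted before it ever reaches the docking or MD step. This is a minimal sketch assuming fixed-width PDB coordinate columns; `diagnose_pdb` is a hypothetical name and the checks are deliberately basic.

```python
def diagnose_pdb(text):
    """Return a list of human-readable problems found in PDB text.

    An empty list means the basic checks passed. Only two things are
    checked: that atom records exist, and that the x, y, z fields
    (fixed-width columns 31-54) parse as numbers.
    """
    problems = []
    atom_lines = [
        line for line in text.splitlines()
        if line.startswith(("ATOM", "HETATM"))
    ]
    if not atom_lines:
        problems.append("no ATOM/HETATM records")
    for i, line in enumerate(atom_lines, start=1):
        try:
            float(line[30:38])  # x
            float(line[38:46])  # y
            float(line[46:54])  # z
        except ValueError:
            # Also triggered by truncated lines, where the slices are empty.
            problems.append(f"atom record {i}: unreadable coordinates")
    return problems
```

A batch script can log these messages per file and skip the structure, which turns a mysterious crash three steps later into a one-line explanation at the start.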

Pragmatic Tool Selection: When the standard AutoDock preparation tool (Meeko) had connectivity issues causing “hideously messed up” visualizations, I suggested RDKit-based alternatives with proper PDB→OpenBabel PDBQT conversion that preserved molecular topology. Sometimes the backup approach works better than the official one. This is one of those examples where having a human in the loop really helps, just to jolt the system into a different local minimum where it could explore a better solution. Needless to say, any expert knowledge that one might have either about the tools or the code is going to help. My personal dream for these LLM tools is to reach a point where the tools themselves ask for human intervention at the right stages instead of seeking single-point solutions which may be suboptimal or fail.

The AI Advantage for Non-Programmers

Let me emphasize this again because I think it’s so important: the current hype about AI focuses so much on the results that it ignores the manifold benefits that come from the process. In a nutshell, working with LLMs transformed how I approach computational problems. Instead of spending days debugging Python environments or wrestling with Unicode encoding errors in legacy code, I could focus on the chemistry. The AI handled:

- Writing robust batch processing scripts with proper error handling
- Integrating disparate tools (OpenMM, RDKit, AutoDock) into unified workflows
- Debugging obscure file format issues and dependency conflicts
- Creating validation pipelines with statistical analysis

The key insight: AI assistance works best when you understand the chemistry but don’t want to become a software engineer debugging code. I could specify what I needed scientifically, and the AI handled the implementation details.

What’s Missing for Production Use

There’s still too much brittleness in the results. They often fail not just for edge cases but even for more mainstream ones. For instance, I had to go through several iterations before the code handled flexible HIV protease inhibitors with 10-15 rotatable bonds. There should be better intelligence built into the decision-making process, so that the code either handles such cases or exits gracefully and moves on to the next molecule without explicit instructions. I also mentioned above the tendency of LLMs to come up with one-shot solutions without any human intervention. While a true one-shot solution is the holy grail, for now it would be more useful for the tools to be interactive and to have more of a conversation with the user, rather than getting stuck in plug-and-play routines. Ultimately an AI agent that works through the process iteratively and spits out a comprehensive piece of working code would be ideal, but we aren’t there yet.

Notwithstanding these limitations, this is still tremendous progress relative to where we were only a short time ago. No more do computational chemists need to constantly focus on the “computational” part. Instead they can spend most of their time on the chemistry part. And even for those more interested in the computational part, these tools can enable them to create sophisticated, multi-stage pipelines that are most impactful instead of fussing about the basic foundations. The New World is already here.